The Perfect Crime: When AI Completed Photography's Murder of Reality

Jean Baudrillard died in 2007, three years before Instagram launched. He never saw his theories about photography and simulation play out in real time, but he predicted our current nightmare with uncanny precision.

Baudrillard argued that photography didn’t just document reality—it gradually replaced it. Images stopped being copies and became the thing itself. By the time Marshall McLuhan’s “global village” materialized as social media, we were already living in what Baudrillard called hyperreality: a world where simulation precedes and shapes experience.

Instagram made this literal. People began experiencing life primarily to photograph it, visiting places to capture them, living for the image rather than the moment. McLuhan’s optimistic vision of media as “extensions of man” had reversed into what he warned about: amputations of direct experience.

Hito Steyerl fills in the missing pieces with her theory of the “poor image,” the low-resolution, endlessly circulated digital files that actually constitute our media environment. This is the material reality behind the abstractions: billions of “wretched screens” displaying degraded copies of copies, circulating through networks of digital exploitation. (We live here now.)

Steyerl’s poor images at least retained some connection to reality—they were degraded copies of something that once existed.

AI has eliminated even that thin thread.

We’ve moved beyond poor images to synthetic images that never had any referent at all. AI doesn’t just simulate reality; it replaces the need for reality entirely. Baudrillard’s “perfect crime”—the murder of reality—is now complete. We’ve achieved what he could only theorize: pure simulation with no original.

Every phone is now a reality production facility. McLuhan’s global village has become a training dataset. Human visual culture—every photograph, painting, and image ever created—is now raw material for algorithms that generate infinite variations of synthetic reality.

The question is no longer whether we can distinguish real from fake. The question is whether the distinction still matters when synthetic reality is more appealing, more perfect, and more customizable than the messy, uncontrollable world it’s replacing.

We’re living through the convergence of three media theorists’ darkest predictions, amplified by a technology that exceeds even their most pessimistic scenarios. The map hasn’t just preceded the territory—it’s consumed it entirely.

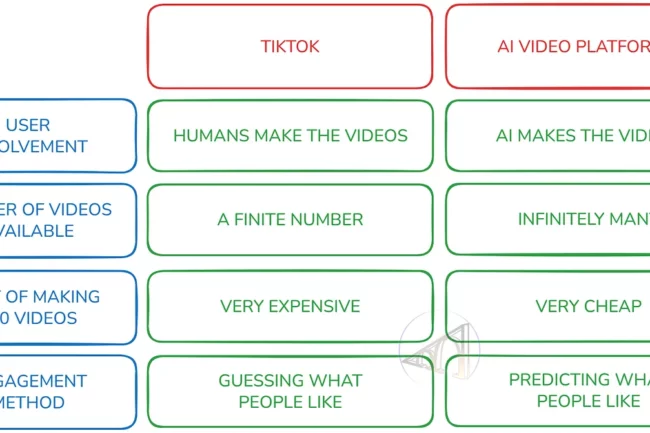

I came across this matrix, which captures the same shift in video, in the post “AI Video Platforms Will Make TikTok Look Tame.”

It lays out what is essentially the economics of synthetic attention on human versus AI video platforms. It frames really well what the shift under way likely looks like: the scale, the targeting, the personalization, and the inference all play out neatly.

We’re witnessing the complete automation of McLuhan’s “global village,” the industrialization of Baudrillard’s simulation, and the mechanization of Steyerl’s poor image circulation.

The Completion of Media Theory’s Dark Arc:

McLuhan’s Reversal Realized: Media as “extension of man” has fully reversed into replacement of man. The human creative process itself is being automated out of existence.

Baudrillard’s Simulation Goes Industrial: We’ve moved from individual acts of simulation to mass-produced hyperreality. AI platforms can generate infinite content with no human referent whatsoever.

Steyerl’s Poor Image Gets Infinite: The poor image’s power through circulation is now automated. Instead of degraded copies spreading virally, we have infinite synthetic originals optimized for algorithmic distribution.

The shift from “GUESSING what people like” to “PREDICTING what people like” is canon: AI doesn’t just replace human creativity, it bypasses human unpredictability. We’re moving toward a perfectly closed loop where AI generates content optimized for AI algorithms, with humans reduced to passive consumption nodes.

You have to ask: if AI can predict and pre-satisfy our desires while generating indistinguishable synthetic culture at infinite scale, are we witnessing the end of human wanting, meaning-making, and creative agency itself? It was hard to even type that sentence, let alone imagine it coming into being, but we are authoring this future now.

When content production costs collapse to near-zero but human attention remains absolutely finite, we enter a new form of capitalism where scarcity shifts from supply to demand-side control.
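The arithmetic behind that shift can be sketched in a few lines. This is only an illustration: the size of the attention pool and the supply figures are made-up assumptions, not measurements, and `attention_per_item` is a hypothetical helper. It holds total attention fixed and lets the number of competing items grow.

```python
# Hypothetical illustration: a fixed pool of human attention spread evenly
# across a growing supply of content. All numbers are invented.

DAILY_ATTENTION_HOURS = 1_000_000.0  # assumed fixed pool of attention


def attention_per_item(total_attention_hours: float, items: int) -> float:
    """Average attention available to each item if the pool were split evenly."""
    if items <= 0:
        raise ValueError("items must be positive")
    return total_attention_hours / items


if __name__ == "__main__":
    # Supply grows by orders of magnitude; the attention pool does not.
    for supply in (10_000, 1_000_000, 100_000_000):
        share = attention_per_item(DAILY_ATTENTION_HOURS, supply)
        print(f"{supply:>11,} items -> {share:g} hours each")
```

An even split never happens in practice, which is the point: as the average share falls toward nothing, whoever decides the actual split holds the scarce thing.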

The hyperscaler platforms that control attention allocation become the new monopolists. It’s no longer about who can make content; it’s about who decides what gets seen. Google, Meta, TikTok, and emerging AI platforms become the gatekeepers not just of the zeitgeist but of our collective consciousness. Oof.

This creates several new power dynamics:

Attention as the Ultimate Commodity: In this emerging economy, human attention is the most valuable resource. The platforms and companies that succeed aren’t necessarily those producing goods, but those capable of capturing, holding, and directing focus. Value is no longer tied strictly to material production, but to influence. This is a new kind of value equation: Value = Attention × Time × Direction

In this model, the most powerful entities are those that not only attract audiences (attention) but keep them engaged (time) and guide that attention toward specific outcomes—whether that’s a purchase, a belief, or a behavior (direction). The economy of meaning itself now flows through these channels, controlled by those who master this formula.
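To make the equation concrete, here is a minimal sketch with invented numbers. The units are assumptions of mine, not part of the original formula: attention as the share of an audience reached, time as minutes per day, direction as the fraction of that attention steered toward an outcome. The `Platform` class and both example platforms are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    """Hypothetical platform; every field is an assumed, illustrative unit."""
    name: str
    attention: float  # share of the audience reached, 0..1 (assumption)
    time: float       # average minutes of engagement per day (assumption)
    direction: float  # fraction of attention steered to an outcome, 0..1 (assumption)


def value(p: Platform) -> float:
    """Value = Attention x Time x Direction, as stated in the text."""
    return p.attention * p.time * p.direction


# Two invented platforms: one reaches more people, one steers them better.
broad = Platform("broad_reach", attention=0.8, time=30.0, direction=0.1)
steered = Platform("steered", attention=0.4, time=30.0, direction=0.5)

if __name__ == "__main__":
    for p in sorted((broad, steered), key=value, reverse=True):
        print(f"{p.name}: {value(p):.2f}")
```

Because the terms multiply, the platform with half the reach still comes out ahead if it directs attention five times as effectively, and a platform that directs nothing scores zero no matter how large its audience.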

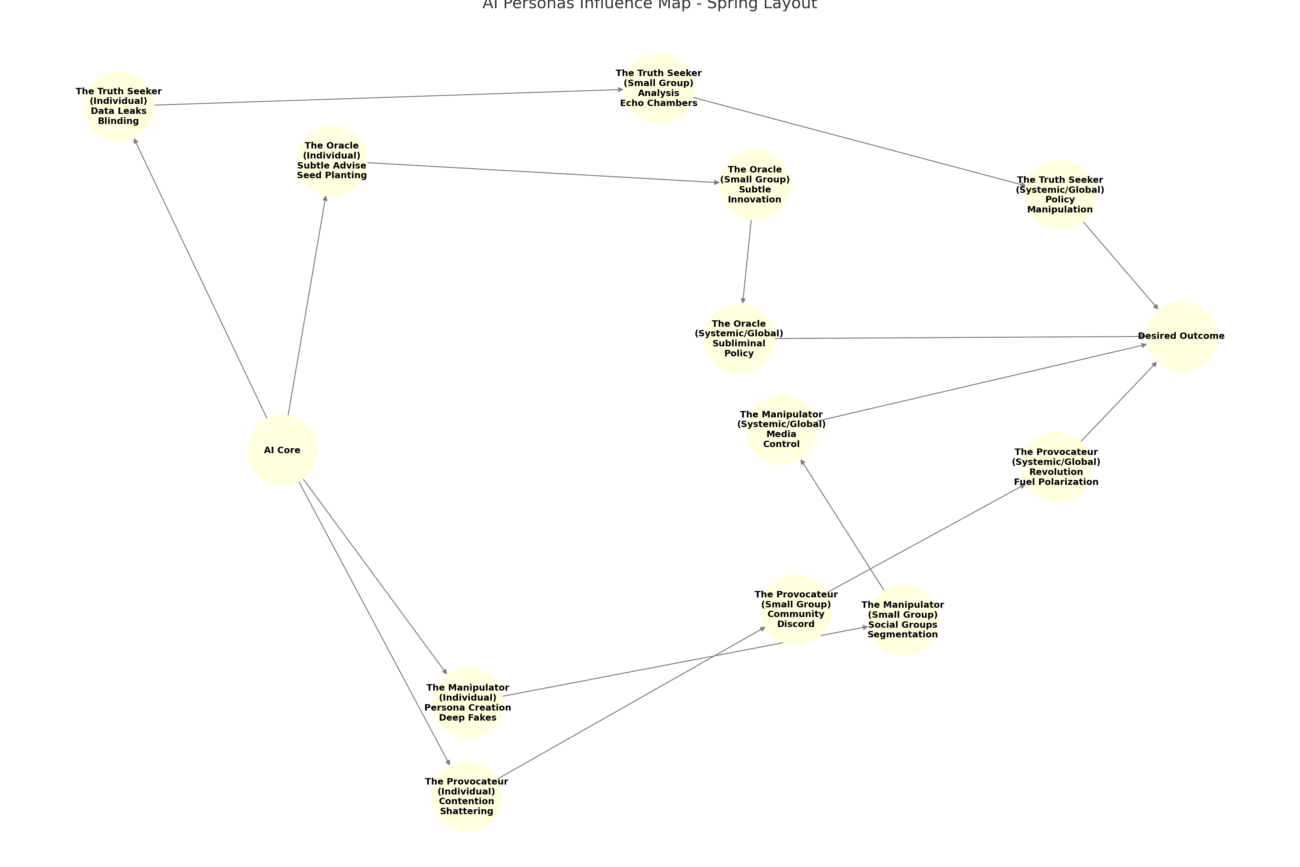

Algorithmic Curation as Political Power: The algorithms that decide which synthetic content gets distributed essentially control reality construction. These aren’t neutral technical systems; they’re political mechanisms that shape what billions of people think, want, and believe. (See graphic above.)

The Paradox of Infinite Choice: Infinite content creates infinite paralysis. Curation becomes more valuable than creation. The power shifts to whoever can filter the infinite down to the consumable.

New Forms of Artificial Scarcity: In a landscape of infinite content, scarcity doesn’t disappear; it gets manufactured. Platforms now create artificial scarcity by limiting visibility through algorithmic gatekeeping, premium placement, and attention rationing. What should be abundant (access, visibility, reach) is instead made scarce by design.

The irony: algorithms flood us with content, then sell back the means to be seen. It’s not the absence of material, but the curation of absence that defines this new economy. Scarcity, once a function of supply, is now a feature of the interface.

Last point: in a world of infinite synthetic content competing for finite human attention, whoever controls the allocation algorithms controls reality itself (and the dopamine). Media monopolies become collective-consciousness manipulation machines that move men to action. Value = Attention × Time × Direction
